Introduction

If mobile robots are to become ubiquitous, we must first solve fundamental problems in perception. Before a mobile robot system can act intelligently, it must be given – or acquire – a representation of the environment that is useful for planning and control. Perception comes before action, and the perception problem is one of the most difficult we face.

We study probabilistic perception algorithms and estimation theory that enable long-term autonomous operation of mobile robotic systems, particularly in unknown environments. We have extensive experience with vision-based, real-time localization and mapping systems, and are interested in a fundamental understanding of the sufficient statistics that can be used to represent the state of the world.

An important goal in mobile robotics is the development of perception algorithms that allow for persistent, long-term autonomous operation in unknown situations (over weeks or more). In our effort to achieve long-term autonomy, we have had to solve problems of both metric and semantic estimation. We use real-time, embodied robot systems equipped with a variety of sensors – including lasers, cameras, and inertial sensors – to develop and validate algorithms and knowledge representations that enable long-term autonomous operation.

Current projects

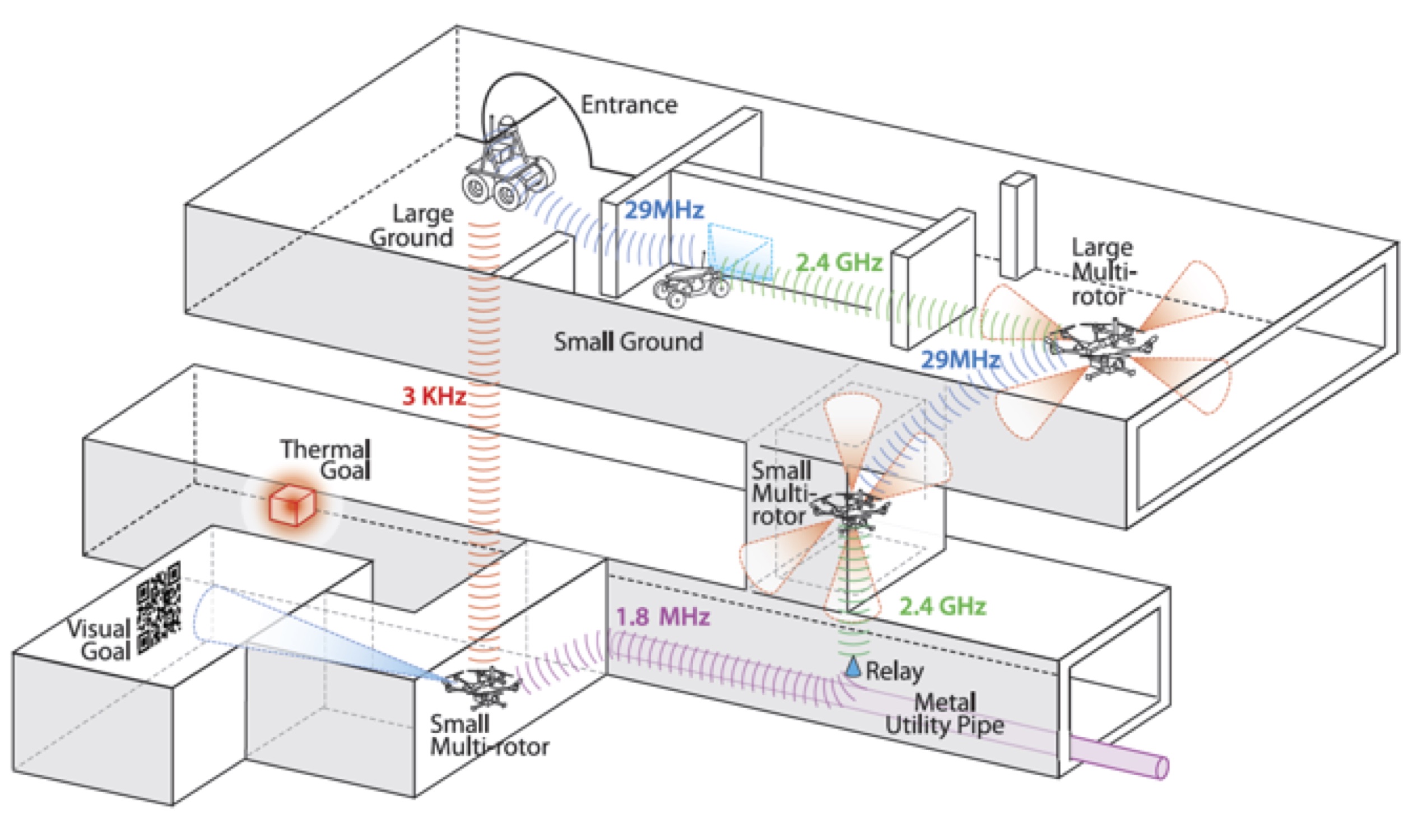

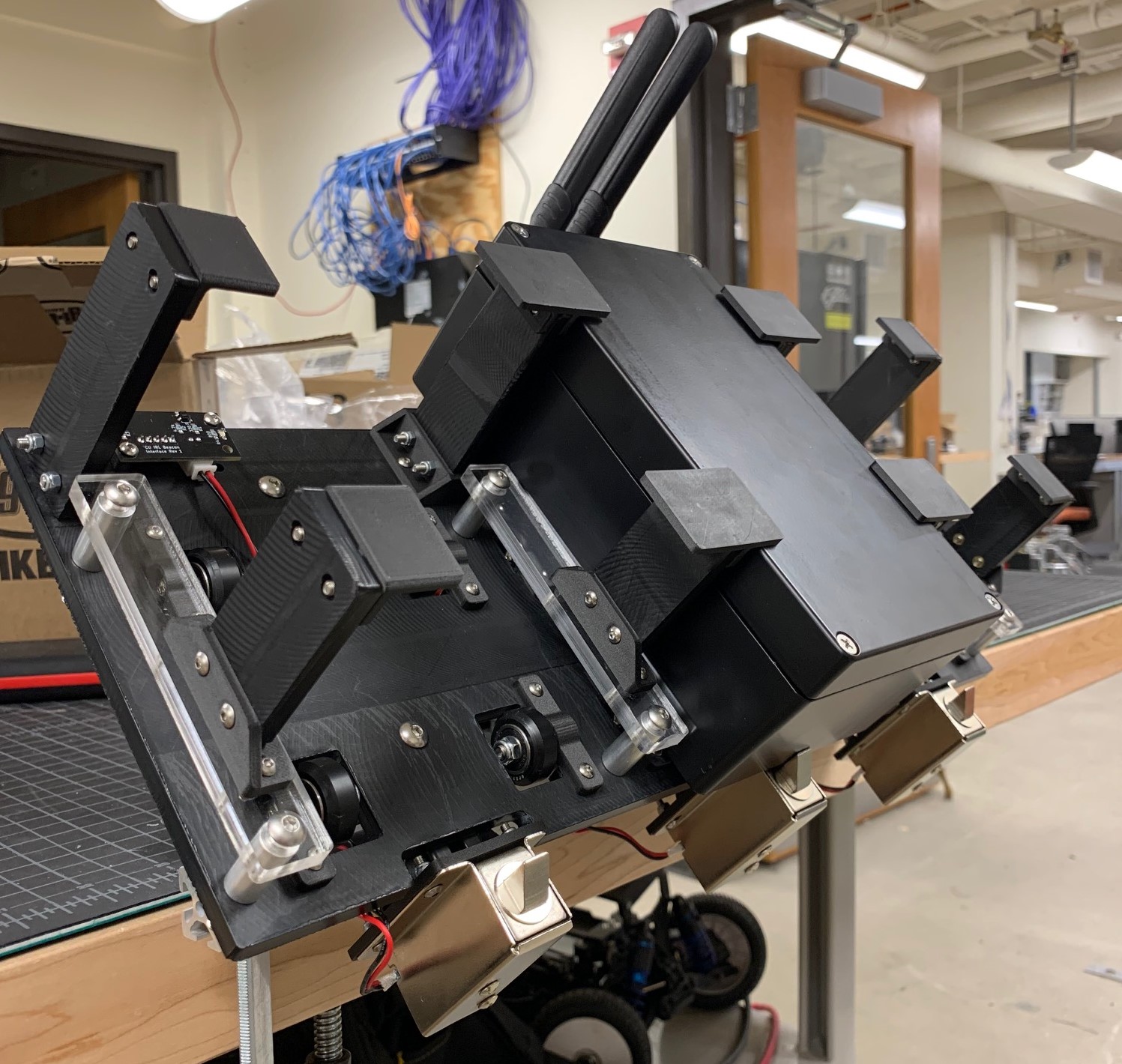

MARBLE: Multi-agent Autonomy with RADAR-Based Localization for Exploration


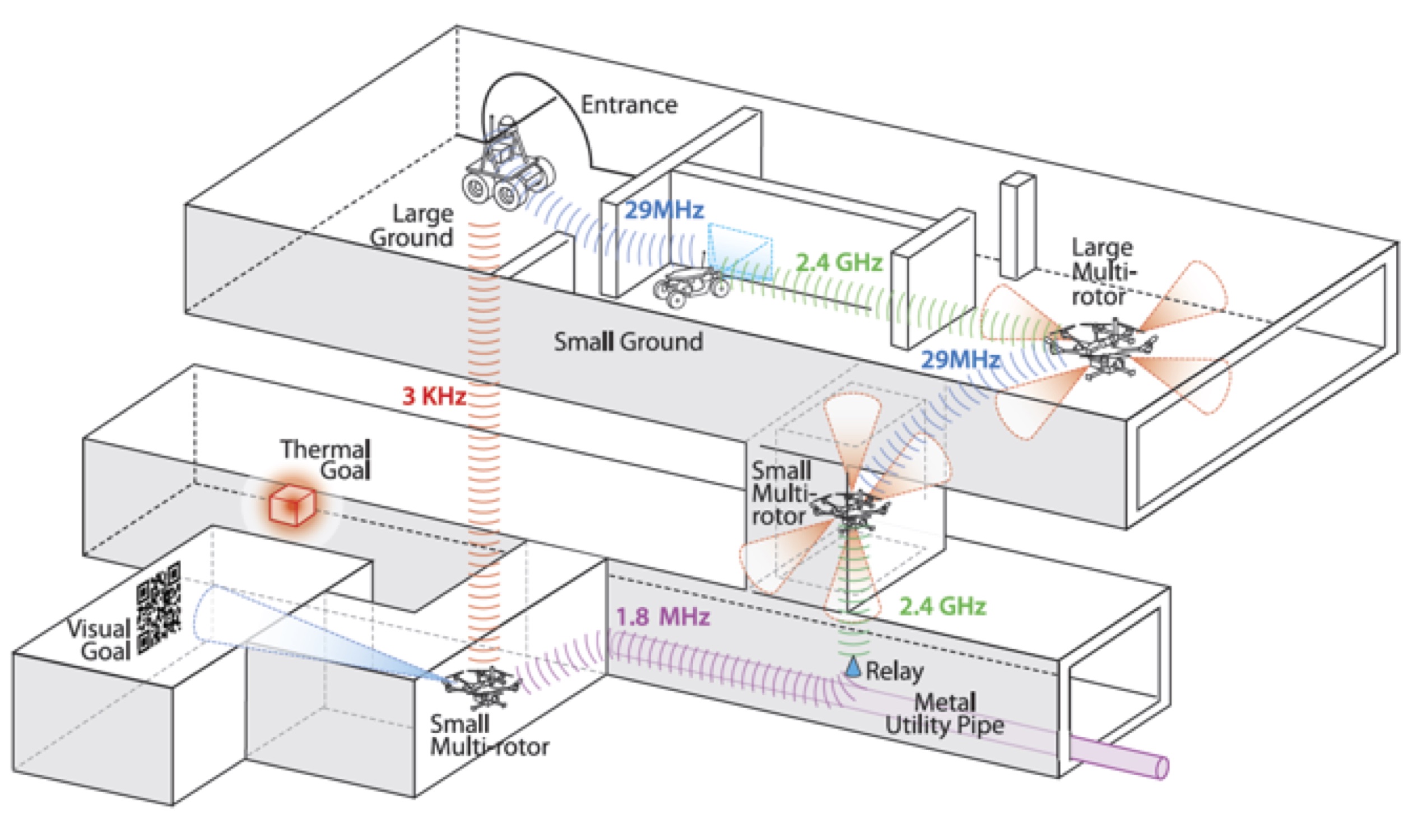

ARPG is a component of team MARBLE, a funded participant in the DARPA Subterranean Challenge. We are providing expertise in autonomy, perception, and navigation. The project kicked off in September 2018 and is ongoing, with competition events from August 2019 onward.

Funded by

- DARPA TTO Subterranean Challenge

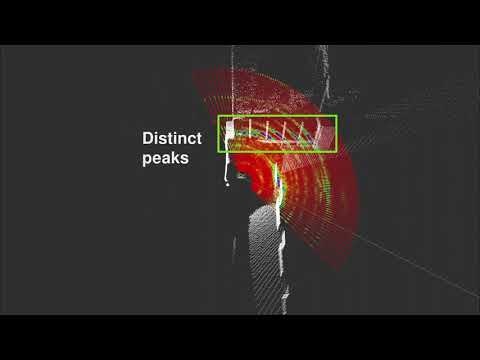

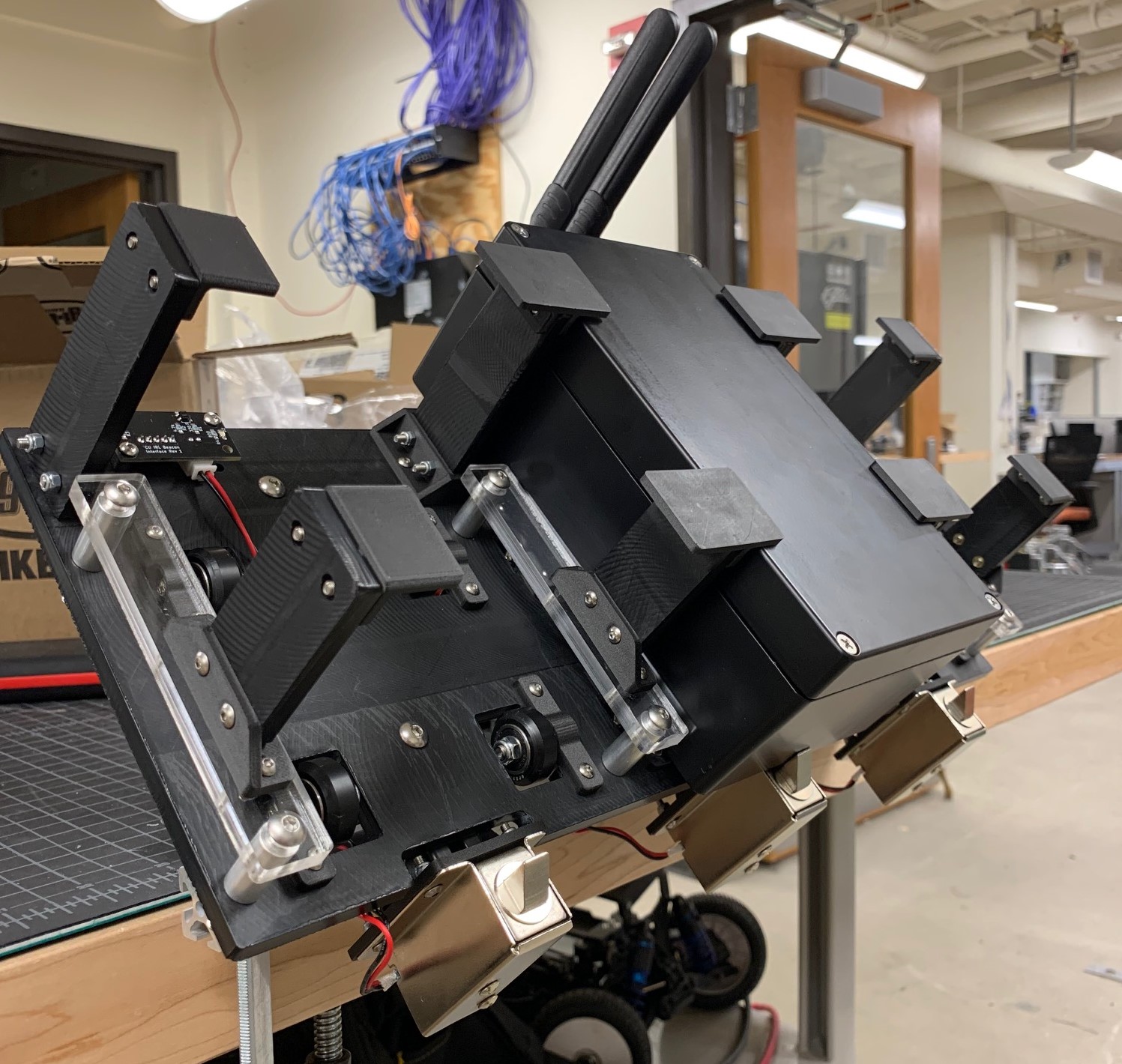

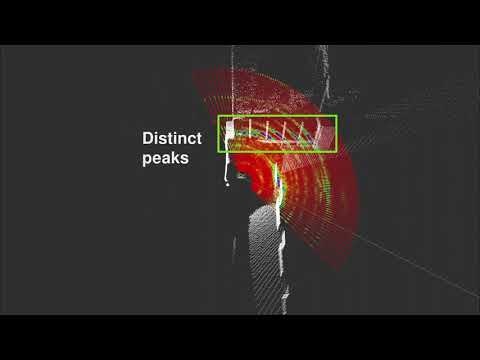

Robotic Perception With Millimeter Wave RADAR

Millimeter wave radar has become an increasingly valuable sensor in real-world robotics applications because its wavelengths, long relative to those used by cameras and lidar, can penetrate particulate matter such as fog, smoke, and dust. Through various projects and datasets, we aim to demonstrate how the unique characteristics and data types available from radar can be leveraged, using a mixture of traditional and learned techniques, to solve robotic perception problems in less-than-ideal conditions.

Publications

- Andrew Kramer, Kyle Harlow, Christopher Williams, Christoffer Heckman. "ColoRadar: The Direct 3D Millimeter Wave Radar Dataset". In International Journal of Robotics Research 2021.

- Andrew Kramer, Christoffer Heckman. "Radar-Inertial State Estimation and Mapping for Micro-Aerial Vehicles In Dense Fog". In International Symposium on Experimental Robotics 2020.

- Andrew Kramer, Angel Santamaria-Navarro, Aliakbar Aghamohammadi, and Christoffer Heckman. "Radar-Inertial Ego-Velocity Estimation for Visually Degraded Environments". In International Conference on Robotics and Automation 2020.

Funded by

- NSF-NRI #1830686: 'Life-Long Learning for Motion Planning by Robots in Human Populated Environments'

- DARPA TTO Subterranean Challenge

- NASA NSTRF 80NSSC18K1195

Multi Robot Communication Schemes

This project aims to develop a dynamic mesh network for difficult communication environments. Multi-agent exploration in such environments requires a software stack that is resilient to sudden changes in mesh topology while carrying a wide range of data products. This requires a full-stack implementation, including adapting single-robot communication solutions such as TCPROS to support mesh-based multi-robot coordination and visualization of the current network topology. Our approach allows message transfers in a ROS environment to be prioritized: large but not time-critical messages can be paused so that high-priority data can be transmitted.
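A minimal sketch of the prioritization idea, assuming messages are split into fixed-size chunks and a scheduler sends one chunk per transmission slot from the most urgent pending message. The class `PriorityChunkScheduler`, its parameters, and the message names are hypothetical illustrations, not part of TCPROS or the project's actual software; the point is only that a large transfer is paused rather than dropped when urgent data arrives.

```python
import heapq
import itertools
from dataclasses import dataclass, field


@dataclass(order=True)
class _Entry:
    priority: int                           # lower value = more urgent
    seq: int                                # tie-breaker: FIFO among equal priorities
    name: str = field(compare=False)
    remaining: int = field(compare=False)   # chunks still to send


class PriorityChunkScheduler:
    """Hypothetical sketch: always send the next chunk of the most urgent
    pending message, so a large low-priority transfer is paused, not aborted."""

    def __init__(self, chunk_size):
        self.chunk_size = chunk_size
        self._heap = []                     # min-heap of _Entry
        self._seq = itertools.count()

    def submit(self, name, size_bytes, priority):
        chunks = max(1, -(-size_bytes // self.chunk_size))  # ceiling division
        heapq.heappush(self._heap, _Entry(priority, next(self._seq), name, chunks))

    def step(self):
        """Send one chunk and return the name of its message (None if idle)."""
        if not self._heap:
            return None
        entry = self._heap[0]
        entry.remaining -= 1                # ordering fields are unchanged
        if entry.remaining == 0:
            heapq.heappop(self._heap)
        return entry.name


sched = PriorityChunkScheduler(chunk_size=1000)
sched.submit("map_update", size_bytes=5000, priority=5)  # large, not time-critical
sent = [sched.step(), sched.step()]                      # map transfer begins
sched.submit("estop", size_bytes=200, priority=0)        # urgent message arrives
while True:
    name = sched.step()
    if name is None:
        break
    sent.append(name)
print(sent)
# ['map_update', 'map_update', 'estop', 'map_update', 'map_update', 'map_update']
```

Chunking is what makes preemption cheap: the scheduler never has to abort a half-sent map; it simply resumes the remaining chunks once no more urgent data is queued.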

Funded by

- DARPA TTO Subterranean Challenge

Mixed Reality With Field Robots for Emergency Response

Collaborative human-robot field operations rely on timely decision-making and coordination, which can be challenging for heterogeneous teams operating in large-scale deployments. In this work, we present the design of an immersive, mixed reality (MR) interface that supports sense-making and situational awareness based on the data collection capabilities of both human and robotic team members. We use a field robot to collect environment data and generate a 3D reconstruction, which is then displayed in a head-mounted display (HMD) alongside very-large-scale terrain. Users can select a part of the terrain in the immersive environment to view the 3D reconstruction of that area. The figure shows the terrain of Boulder County; the point cloud above it shows the 3D reconstruction along the blue trajectory on the terrain.

Funded by

- NSF #1764092 Medium: Data-Mediated Communication with Proximal Robots for Emergency Response

Light source estimation

This effort addresses the problem of determining the location, direction, intensity, and color of the illuminants in a given scene. The problem has a broad range of applications in augmented reality, robust robot perception, and general scene understanding. In our research, we model complex light interactions with a custom path-tracer that captures the effects of both direct and indirect illumination. Using a physically based light model not only improves our estimates of the light sources but will also play a critical role in future research on surface property estimation and geometry refinement, ultimately leading to more accurate and complete scene reconstruction systems.
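As a much-simplified illustration of light source estimation, the sketch below recovers the intensity of a single point light at a known position from observed brightness. It assumes Lambertian surfaces with known albedo, unit-length normals, and direct illumination only, so it deliberately omits the indirect illumination that the path-tracer captures. The function names are hypothetical.

```python
import math


def shading_factor(point, normal, light_pos, albedo):
    """Direct-only Lambertian response per unit light intensity:
    albedo * max(0, n . l) / d^2, with inverse-square falloff.
    `normal` is assumed to be unit length."""
    d = [lp - p for lp, p in zip(light_pos, point)]
    dist2 = sum(c * c for c in d)
    dist = math.sqrt(dist2)
    l = [c / dist for c in d]                       # unit vector toward the light
    cos_theta = max(0.0, sum(n * c for n, c in zip(normal, l)))
    return albedo * cos_theta / dist2


def estimate_intensity(points, normals, observed, light_pos, albedo=1.0):
    """Least squares for one point light at a known position:
    minimize sum_i (o_i - I * a_i)^2  =>  I = sum(a_i * o_i) / sum(a_i^2)."""
    a = [shading_factor(p, n, light_pos, albedo) for p, n in zip(points, normals)]
    den = sum(ai * ai for ai in a)
    if den == 0.0:
        raise ValueError("the light does not illuminate any observed point")
    return sum(ai * oi for ai, oi in zip(a, observed)) / den


# Synthetic check: a flat, upward-facing floor lit from 2 units above the origin.
light = (0.0, 0.0, 2.0)
points = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 1.0, 0.0)]
normals = [(0.0, 0.0, 1.0)] * len(points)
true_intensity = 50.0
observed = [true_intensity * shading_factor(p, n, light, 1.0)
            for p, n in zip(points, normals)]
estimate = estimate_intensity(points, normals, observed, light)
print(round(estimate, 6))  # 50.0
```

Because this toy model is linear in intensity, a closed-form solution exists; estimating the light's position as well makes the problem nonlinear.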

Publications

- Mike Kasper, Christoffer Heckman. "Multiple Point Light Estimation from Low-Quality 3D Reconstructions". In International Conference on 3D Vision (3DV) 2019.

- Mike Kasper, Nima Keivan, Gabe Sibley, Christoffer Heckman. "Light Source Estimation in Synthetic Images". In European Conference on Computer Vision, Virtual/Augmented Reality for Visual Artificial Intelligence Workshop 2016.

Funded by

- Toyota grant 33643/1/ECNS20952N: Robust Perception

Parkour Cars

This project aims to develop high-fidelity real-time systems for perception, planning, and control of agile vehicles in challenging terrain, including jumps and loop-the-loops. Current research focuses on the local planning and control problem. Because these maneuvers are so demanding, the planning and control systems must account for the underlying physical model of the vehicle and terrain. This style of simulation-in-the-loop planning enables very accurate prediction and correction of the vehicle state, as well as precise learning of attributes of the underlying physical model.
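The core loop of simulation-in-the-loop planning can be sketched as a shooting-style model predictive controller: candidate controls are rolled out through a forward model, the one with the lowest predicted cost is applied, and the planner runs again at the next step. This sketch assumes a hypothetical 1D double-integrator model (position and velocity under a commanded acceleration) and a grid of constant-acceleration candidates; a real vehicle-terrain model would be far richer, and this is not the controller from the publications below.

```python
def predict(state, u, steps, dt):
    """Forward-simulate the hypothetical model under constant acceleration u,
    yielding (position, velocity) after each step."""
    pos, vel = state
    for _ in range(steps):
        vel += u * dt          # semi-implicit Euler integration
        pos += vel * dt
        yield pos, vel


def plan(state, target, dt=0.1, horizon=15, u_max=2.0, n_candidates=41,
         effort_weight=0.01):
    """Roll out each candidate acceleration over the horizon and return the
    one whose predicted trajectory stays closest to the target."""
    best_u, best_cost = 0.0, float("inf")
    for i in range(n_candidates):
        u = -u_max + 2 * u_max * i / (n_candidates - 1)
        cost = sum((p - target) ** 2 for p, _ in predict(state, u, horizon, dt))
        cost += effort_weight * u * u * horizon   # mild penalty on control effort
        if cost < best_cost:
            best_u, best_cost = u, cost
    return best_u


state = (0.0, 0.0)             # start at rest at position 0
for _ in range(100):           # 10 s of closed-loop control at dt = 0.1
    u = plan(state, target=1.0)
    # Here the "real" system is the model itself; on a vehicle it would not be,
    # and replanning every step is what corrects for the mismatch.
    state = next(predict(state, u, steps=1, dt=0.1))
print(state)
```

Replanning at every step, rather than executing the whole predicted sequence, is what lets the controller correct for disturbances and model error.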

Publications

- Sina Aghli and Christoffer Heckman. "Terrain Aware Model Predictive Controller for Autonomous Ground Vehicles". In Robotics: Science and Systems, Bridging the Gap in Space Robotics Workshop 2017.

- Christoffer Heckman, Nima Keivan, and Gabe Sibley. "Simulation-in-the-loop for Planning and Model-Predictive Control". In Robotics: Science and Systems, Realistic, Rapid, and Repeatable Robot Simulation Workshop 2015.

Funded by

- NSF #1646556. CPS: Synergy: Verified Control of Cooperative Autonomous Vehicles

- DARPA #N65236-16-1-1000. DSO Seedling: Ninja Cars

- Toyota grant 33643/1/ECNS20952N: Robust Perception

Referring Expressions for Object Localization

Understanding references to objects based on attributes, spatial relationships, and other descriptive language expands the ability of robots to locate unknown objects (zero-shot learning), find objects in cluttered scenes, and communicate uncertainty to human collaborators. We are collecting a new set of annotations, SUNspot, for the SUN RGB-D scene understanding dataset. Unlike other referring expression datasets, SUNspot will focus on graspable objects in interior scenes, accompanied by the depth sensor data and full semantic segmentation from the SUN RGB-D dataset. Using SUNspot, we hope to develop a novel referring expression system that will improve object localization for human-robot interaction.

RestoreBot: Autonomous Restoration and Revegetation of Degraded Ecosystems

In collaboration with the Correll Lab and the Aridlands Research Lab at CU Boulder, we aim to develop robotic multi-agent motion planning, joint visual-tactile perception, and multimodal 3D map construction techniques to support targeted seed planting in degraded rangelands. Dryland ecosystems make up 40 percent of the global land surface and support nearly one-sixth of the world's population. About 20 percent of the world's pastures have been degraded to some extent, leading to declines in a broad range of ecosystem services and ultimately threatening vulnerable communities. The RestoreBot project applies developments in robotics and automation to reverse some of this degradation in the spirit of the UN Decade on Ecosystem Restoration (https://www.decadeonrestoration.org). Our field work primarily takes place at the Canyonlands Research Center in southeastern Utah.

Funded by

- USDA/NIFA #2021-67021-33450

Multi-Agent Force-Based Motion Planning

This project is investigating how multi-agent teams can accomplish tasks using a force-based motion planning algorithm. Under this formulation, groups of agents with limited computational resources navigate using a distributed motion planning paradigm and attempt to complete tasks while sharing only limited information with one another.
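One common way to realize force-based planning is an artificial potential field, sketched below under the assumption that each agent is attracted to its own goal and repelled by neighbors within a sensing radius, with neighbor positions being the only shared information. The function `force_step` and its gains are hypothetical; the project's actual force formulation may differ.

```python
import math


def force_step(positions, goals, k_att=1.0, k_rep=0.5, radius=1.0,
               max_speed=0.5, dt=0.1):
    """One synchronous update of a hypothetical potential-field planner.
    Each agent uses only its own goal and the positions of neighbors
    closer than `radius`."""
    new_positions = []
    for i, (x, y) in enumerate(positions):
        gx, gy = goals[i]
        fx, fy = k_att * (gx - x), k_att * (gy - y)   # attraction to own goal
        for j, (ox, oy) in enumerate(positions):
            if j == i:
                continue
            dx, dy = x - ox, y - oy
            d = math.hypot(dx, dy)
            if 0 < d < radius:
                # Repulsion grows as a neighbor gets closer and vanishes at `radius`.
                mag = k_rep * (1.0 / d - 1.0 / radius) / d ** 2
                fx += mag * dx / d
                fy += mag * dy / d
        # Treat the net force as a velocity command, clipped to max_speed.
        speed = math.hypot(fx, fy)
        if speed > max_speed:
            fx, fy = fx * max_speed / speed, fy * max_speed / speed
        new_positions.append((x + fx * dt, y + fy * dt))
    return new_positions


# A lone agent drives to its goal.
solo = [(0.0, 0.0)]
for _ in range(200):
    solo = force_step(solo, goals=[(2.0, 1.0)])
print(solo)  # approximately [(2.0, 1.0)]

# Two agents that are too close push each other apart.
pair = force_step([(0.0, 0.0), (0.5, 0.0)], goals=[(0.0, 0.0), (0.5, 0.0)])
print(pair)
```

Pure potential-field methods can stall in local minima (for example, two agents approaching each other exactly head-on), which is one reason practical planners add tie-breaking or task-level coordination on top of the local forces.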

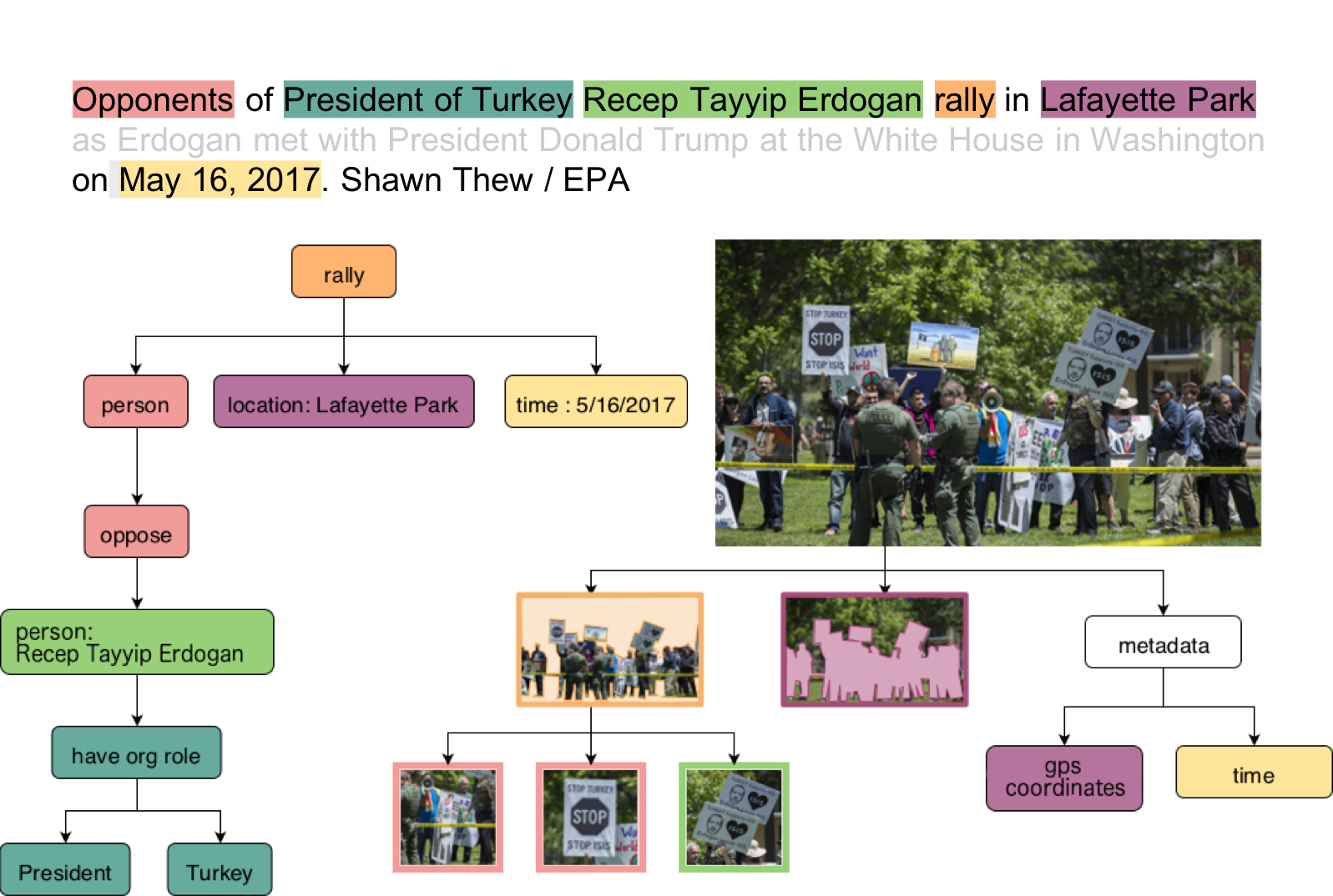

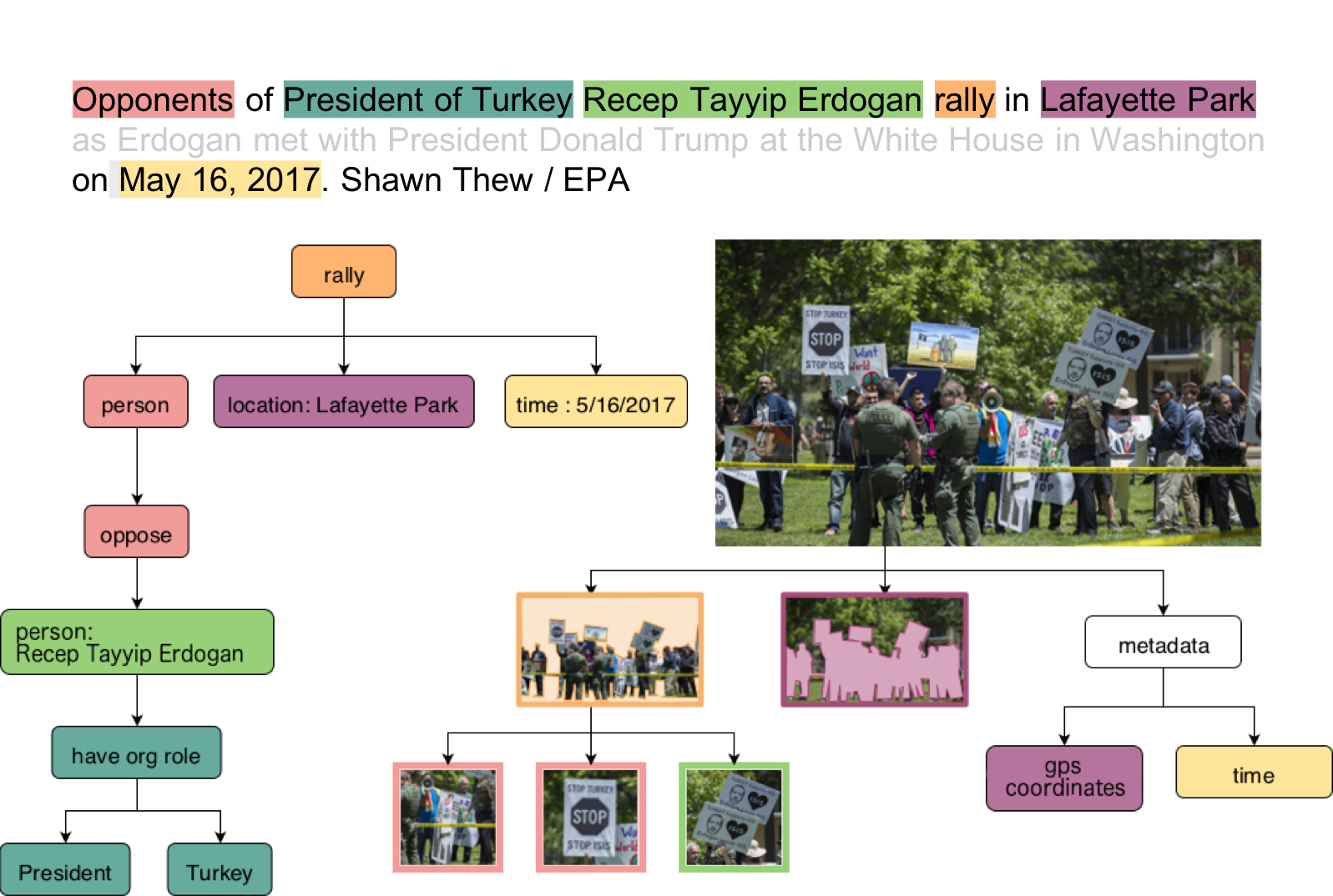

RAMFIS: Representations of Abstract Meaning for Information Synthesis

Humans can readily extract complex information from many different modalities, including spoken and written expressions as well as images and videos, and synthesize it into a coherent whole. This project aims to support automated synthesis of diverse multimedia information sources. We are proposing a rich, multi-graph Common Semantic Representation (CSR) based on Abstract Meaning Representations (AMRs), embellished with vision and language vector representations and with temporal and causal relations between events, and supported by a rich ontology of event and entity types.

Funded by

- DARPA Award: FA8750-18-2-0016. AIDA

Compass

Compass is a simultaneous localization and mapping (SLAM) pipeline with an extensible frontend and an optimization backend based on the Ceres Solver.
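To illustrate what a SLAM optimization backend does, the sketch below solves a toy 1D pose graph: odometry edges and a loop closure become least-squares residuals, and the resulting normal equations are solved directly. It is written in plain Python rather than against Ceres Solver, so none of it reflects the Compass or Ceres APIs; the function names, the strong prior anchoring pose 0, and the measurements are illustrative assumptions.

```python
def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x


def optimize_pose_graph_1d(n_poses, edges, prior_weight=1e6):
    """edges: (i, j, measured x_j - x_i, weight). Pose 0 gets a strong prior
    at 0 to remove the free global offset (gauge freedom)."""
    H = [[0.0] * n_poses for _ in range(n_poses)]
    g = [0.0] * n_poses
    H[0][0] += prior_weight                  # prior residual: x_0 - 0
    for i, j, z, w in edges:
        # Residual r = (x_j - x_i) - z; Jacobian is -1 w.r.t. x_i, +1 w.r.t. x_j.
        H[i][i] += w
        H[j][j] += w
        H[i][j] -= w
        H[j][i] -= w
        g[i] -= w * z
        g[j] += w * z
    return solve_linear(H, g)


odometry = [(0, 1, 1.0, 1.0), (1, 2, 1.0, 1.0), (2, 3, 1.0, 1.0)]
loop_closure = [(0, 3, 2.7, 1.0)]            # revisiting suggests drift
x = optimize_pose_graph_1d(4, odometry + loop_closure)
print([round(v, 4) for v in x])
# [0.0, 0.925, 1.85, 2.775]: the 0.3 discrepancy is spread evenly over the four edges
```

Because every residual here is linear, one solve suffices. With 2D or 3D rotations the residuals become nonlinear, and a solver such as Ceres repeatedly linearizes and solves a system of this form (by default with Levenberg-Marquardt).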

Publications

- Fernando Nobre, Mike Kasper, Christoffer Heckman. "". In IEEE International Conference on Robotics and Automation 2017.

- Fernando Nobre, Christoffer Heckman. "". In International Symposium on Experimental Robotics 2016.

Funded by

- DARPA #N65236-16-1-1000. DSO Seedling: Ninja Cars

- Toyota grant 33643/1/ECNS20952N: Robust Perception
