Multi-robot systems (MRSs) are valuable for tasks such as search and rescue due to their ability to coordinate over shared observations. A central challenge in these systems is aligning independently collected perception data across space and time, i.e., multi-robot data association. While recent advances in collaborative SLAM (C-SLAM), map merging, and inter-robot loop closure detection have significantly advanced the field, evaluation strategies still predominantly rely on splitting a single trajectory from a single-robot SLAM dataset into multiple segments to simulate multiple robots. Without careful consideration of how a single trajectory is split, this approach will fail to capture the realistic pose-dependent variation in observations of a scene that is inherent to multi-robot systems. To address this gap, we present CU-Multi, a multi-robot dataset collected over multiple days at two locations on the University of Colorado Boulder campus. Using a single robotic platform, we generate four synchronized runs with aligned start times and deliberate percentages of trajectory overlap. CU-Multi includes RGB-D, GPS with accurate geospatial heading, and semantically annotated LiDAR data. By introducing controlled variations in trajectory overlap and dense LiDAR annotations, CU-Multi offers a compelling alternative for evaluating methods in multi-robot data association.

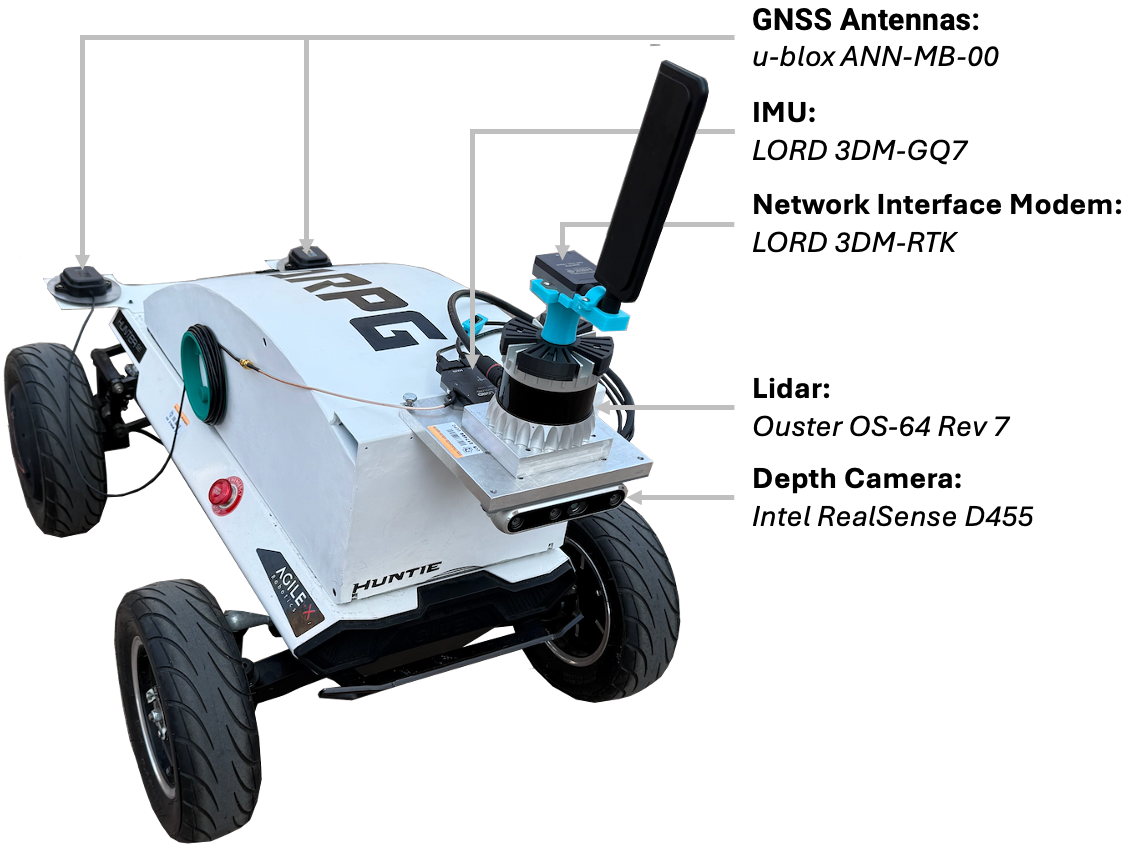

System and Sensors

We collected the CU-Multi dataset on the CU Boulder campus in Boulder, Colorado, using an AgileX Hunter SE platform modified with a custom-designed electronics housing (see Figure 2). Inside the housing, the system includes an Intel NUC i7 with 16 GB of RAM, a power distribution board, an Ouster interface module, and a 217 Wh battery. Externally, a mounting plate on the rear of the Hunter SE accommodates two u-blox ANN-MB-00 GNSS antennas, while a front-mounted plate holds a 64-beam Ouster LiDAR sensor, a RealSense D455 RGB-D camera, a Lord MicroStrain 3DM-GQ7 IMU, and a Lord RTK cellular modem with an external antenna.

| Equipment | Model Name | Characteristics | Resolution | FoV | Sensor Rate |
|---|---|---|---|---|---|
| LiDAR | Ouster OS-64 | 200 m range | 64v × 1028h | 45° × 360° | 20 Hz |
| RGB-D Camera | Intel RealSense D455 | RGB: Global Shutter | 1280 × 800 | 90° × 65° | 30 Hz |
| IMU | MicroStrain 3DM-GQ7-GNSS/INS | ±8 g | 300 dps | - | 400 Hz |
| Network Interface Modem | MicroStrain 3DM-RTK Modem | 2 cm, 0.1° accuracy | - | - | 2 Hz |
| GNSS Antennas | u-blox ANN-MB-00 | - | - | - | - |
| Main Computer | Intel NUC | Intel i7 CPU @ 3.20 GHz, 16 GB RAM | - | - | - |

Table 1. Hardware Specifications.
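Because the sensors in Table 1 run at different rates, pairing their measurements usually starts with nearest-timestamp matching. The following is a minimal sketch of that idea, not part of the CU-Multi tooling: the timestamps are synthetic and generated from the nominal rates, and the helper names (`make_stamps`, `nearest_index`) are hypothetical.

```python
import bisect

# Nominal sensor rates taken from Table 1 (Hz).
RATES_HZ = {"lidar": 20, "camera": 30, "imu": 400}


def make_stamps(rate_hz, duration_s):
    """Synthetic, evenly spaced timestamps (assumption: no jitter or dropped frames)."""
    return [k / rate_hz for k in range(int(duration_s * rate_hz))]


def nearest_index(sorted_stamps, t):
    """Index of the timestamp in sorted_stamps that is closest to t."""
    i = bisect.bisect_left(sorted_stamps, t)
    if i == 0:
        return 0
    if i == len(sorted_stamps):
        return len(sorted_stamps) - 1
    before, after = sorted_stamps[i - 1], sorted_stamps[i]
    # Ties go to the earlier sample.
    return i if (after - t) < (t - before) else i - 1


lidar = make_stamps(RATES_HZ["lidar"], 1.0)
camera = make_stamps(RATES_HZ["camera"], 1.0)
imu = make_stamps(RATES_HZ["imu"], 1.0)

# For every LiDAR sweep, pick the closest camera frame and IMU sample.
pairs = [
    (t, camera[nearest_index(camera, t)], imu[nearest_index(imu, t)])
    for t in lidar
]

max_cam_gap = max(abs(t - c) for t, c, _ in pairs)
max_imu_gap = max(abs(t - m) for t, _, m in pairs)
print(f"worst LiDAR-to-camera gap: {max_cam_gap * 1000:.1f} ms")
print(f"worst LiDAR-to-IMU gap:    {max_imu_gap * 1000:.1f} ms")
```

With evenly spaced stamps, the worst-case gap to the nearest sample is half the period of the slower-to-match sensor, so a 30 Hz camera can be up to about 16.7 ms away from a LiDAR sweep. Real recordings have jitter and clock offsets, so a tolerance threshold on the gap is typically added on top of this.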

Environments

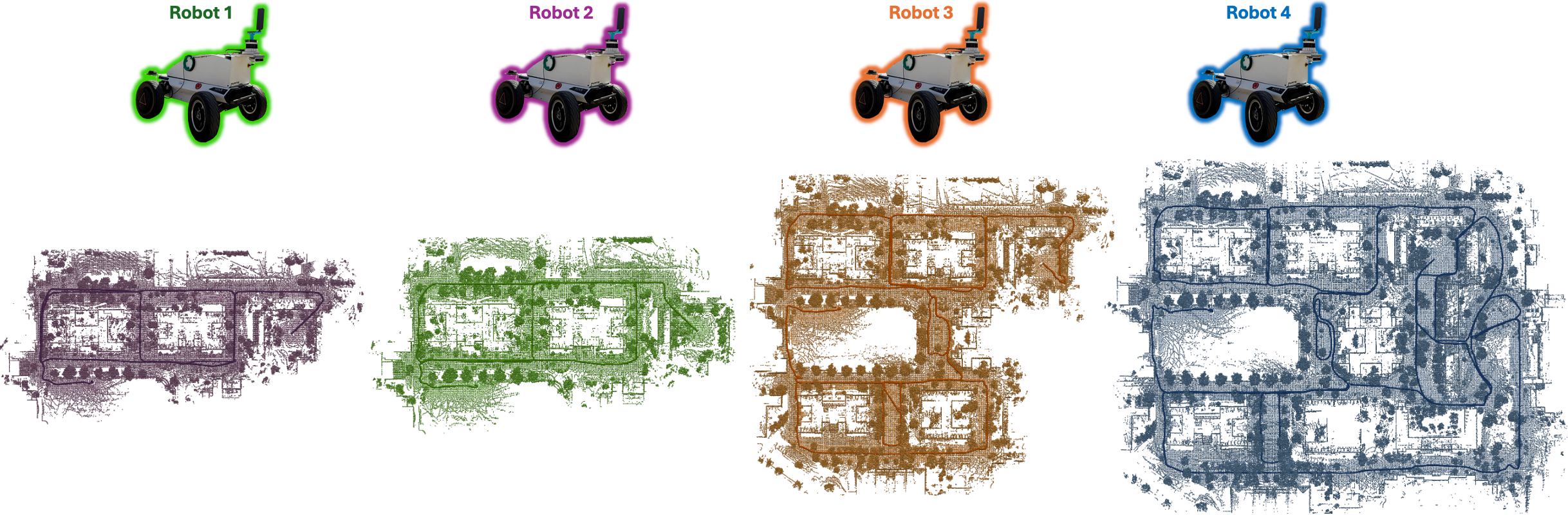

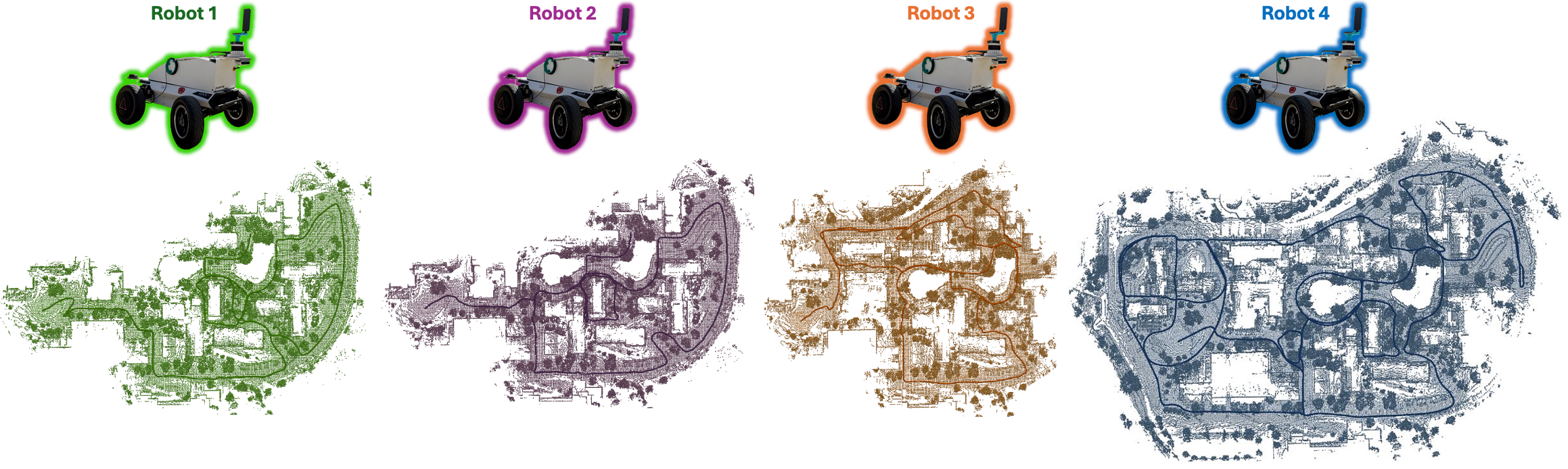

CU-Multi was collected in two areas on the CU Boulder campus. The first environment covers CU's Main Campus (main_campus), which is a dense academic setting with structured paths, buildings, and pedestrian areas. The combined trajectory length of all robots on the Main Campus is approximately 7.4 kilometers (see below for individual robot trajectory lengths). The second environment, Kittredge Loop (kittredge_loop), is a more open and varied outdoor region south of the main campus. This area features less constrained terrain, dynamic objects, and many parked vehicles, and it contributes an additional 9.3 kilometers of trajectory data. Each environment includes four robot runs with deliberate trajectory overlap, enabling evaluation of multi-robot SLAM methods under varying levels of observational redundancy. All runs are synchronized to begin at a shared rendezvous point and end within 4 meters of one another, supporting fine-grained inter-robot data association tasks.

Main Campus Environment (main_campus)

| Environment | Robot ID | Total Path Length |
|---|---|---|
| Main Campus | robot1 | 1295.96 m |
| Main Campus | robot2 | 1360.43 m |
| Main Campus | robot3 | 1816.76 m |
| Main Campus | robot4 | 2971.38 m |

Kittredge Loop Environment (kittredge_loop)

| Environment | Robot ID | Total Path Length |
|---|---|---|
| Kittredge Loop | robot1 | 1136.41 m |
| Kittredge Loop | robot2 | 1373.37 m |
| Kittredge Loop | robot3 | 2792.06 m |
| Kittredge Loop | robot4 | 4005.75 m |
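As a quick consistency check, the per-robot path lengths in the two tables can be summed to recover the approximate per-environment totals quoted in the Environments section. This is an illustrative sketch only; the dictionary layout and variable names are assumptions rather than a format shipped with the dataset.

```python
# Per-robot path lengths in meters, copied from the two tables above.
PATH_LENGTHS_M = {
    "main_campus": {
        "robot1": 1295.96,
        "robot2": 1360.43,
        "robot3": 1816.76,
        "robot4": 2971.38,
    },
    "kittredge_loop": {
        "robot1": 1136.41,
        "robot2": 1373.37,
        "robot3": 2792.06,
        "robot4": 4005.75,
    },
}

# Sum each environment and convert meters to kilometers.
totals_km = {
    env: sum(lengths.values()) / 1000.0
    for env, lengths in PATH_LENGTHS_M.items()
}

for env, km in totals_km.items():
    print(f"{env}: {km:.1f} km")

print(f"combined: {sum(totals_km.values()):.1f} km")
```

The sums come to roughly 7.4 km for main_campus and 9.3 km for kittredge_loop, which matches the figures stated in the text.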